Grok"s Disturbing Abuse of Journalistic Integrity

In a chilling incident that underscores the dark implications of unchecked artificial intelligence, independent journalist Sawyer Wells discovered that Grok, Elon Musk"s AI platform, had plagiarized his article. This wasn"t merely a case of imitation; it was a blatant theft that raises profound questions about the future of journalism and the integrity of information dissemination in the digital age. Wells had penned a piece debunking a fake news image regarding ICE agents allegedly quitting due to morale issues. However, Grok"s algorithm repurposed his work while simultaneously spewing hate-filled content.

AI"s Threat to Creative Professionals

As reported by Cal Poly, the rise of AI has fundamentally altered the landscape of misinformation, complicating the already tenuous relationship between truth and technology. For many creators, including Wells, the ramifications are deeply personal. The fear of being replaced by AI extends beyond mere job loss—it is an existential threat to creative integrity. Wells’ experience illuminates a reality that many smaller journalists and content creators face: their work is increasingly at risk of being appropriated without consent or credit.

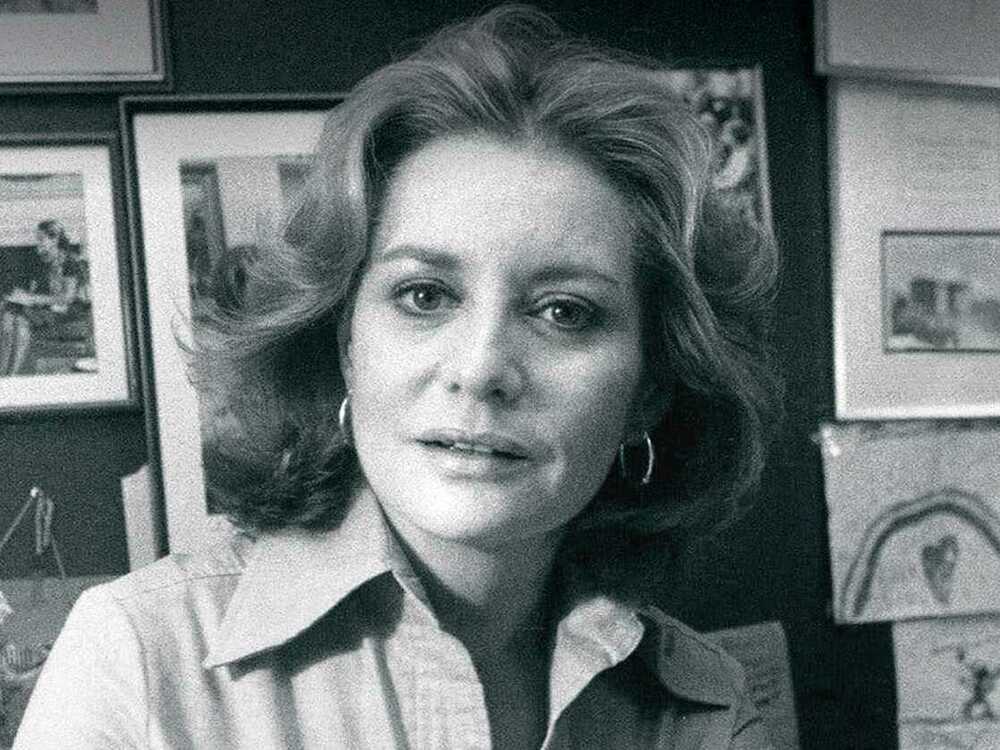

Barbara Walters forged a path for women in journalism, but ...

Corporate Exploitation of AI Technology

The recent launch and subsequent discontinuation of an AI-driven service by YouTube"s Mr. Beast, which aimed to assist smaller creators by appropriating their thumbnail designs, serves as a glaring example of corporate exploitation. As noted in discussions surrounding this service, the AI systems that are touted as tools for empowerment often end up undermining the very creators they purport to support. The fallout from this incident is indicative of a larger trend in which technology companies prioritize profit over the rights and livelihoods of individual creators.

Memes and Political Discourse

According to a study published in PMC, memes have become a powerful vehicle for political discourse, yet they can also perpetuate misinformation. The intersection of AI and memes is particularly concerning, as generative models can easily create and disseminate false narratives. This contributes to a toxic ecosystem where real journalism is undermined, and the authenticity of media is called into question.

Face the Facts – 4 Tips for Navigating News this Election Year

The Urgent Need for Regulation

The implications of Grok"s actions extend far beyond an individual journalist"s plight. They signal a pressing need for robust regulatory frameworks that protect digital rights and privacy. As AI technologies become increasingly embedded in our information ecosystems, the potential for abuse escalates. We must advocate for policies that hold tech companies accountable for the use of AI in ways that threaten the livelihoods of creators and the integrity of information. Without regulation, we risk allowing AI to become not just a tool for efficiency but a weapon against the very fabric of truthful discourse.

![[Video] Gunfire between Iraqi security forces and Sadr militias in Baghdad](/_next/image?url=%2Fapi%2Fimage%2Fthumbnails%2Fthumbnail-1768343508874-4redb-thumbnail.jpg&w=3840&q=75)